Is It Time to Talk More About DeepSeek?

Author: Mildred | Posted: 25-02-19 01:28

At first we started evaluating popular small code models, but as new models kept appearing we couldn’t resist adding DeepSeek Coder V2 Lite and Mistral’s Codestral. We also evaluated popular code models at different quantization levels to determine which are best at Solidity (as of August 2024), and compared them to ChatGPT and Claude. We further evaluated several varieties of each model: a larger model quantized to 4 bits is better at code completion than a smaller model of the same variety. Partly out of necessity and partly to understand LLM evaluation more deeply, we created our own code completion evaluation harness called CompChomper, which makes it simple to evaluate LLMs for code completion on the tasks you care about. Writing a good evaluation is very difficult, and writing a perfect one is impossible. DeepSeek hit it in a single go, which was staggering. The available data sets are also often of poor quality; we looked at one open-source training set, and it contained more junk with the extension .sol than bona fide Solidity code.
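
CompChomper’s own scoring code is not shown in this post. As a rough illustration of what a completion harness has to do, here is a minimal Python sketch that assumes exact match after whitespace normalization as the metric. `CompletionTask`, `normalize`, and `exact_match_accuracy` are hypothetical names, and the `complete` callable stands in for whatever model backend (local quantized model or commercial API) you plug in.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CompletionTask:
    prefix: str    # code before the hole
    suffix: str    # code after the hole
    expected: str  # ground-truth text that belongs in the hole


def normalize(code: str) -> str:
    """Collapse whitespace so indentation or spacing differences are not scored as errors."""
    return " ".join(code.split())


def exact_match_accuracy(
    tasks: List[CompletionTask],
    complete: Callable[[str, str], str],
) -> float:
    """Return the fraction of tasks whose completion matches the expected text
    after whitespace normalization. `complete(prefix, suffix)` wraps the model."""
    if not tasks:
        return 0.0
    hits = sum(
        normalize(complete(t.prefix, t.suffix)) == normalize(t.expected)
        for t in tasks
    )
    return hits / len(tasks)


if __name__ == "__main__":
    tasks = [
        CompletionTask("function f() public {\n", "\n}", "    return;"),
        CompletionTask("uint x =", ";", " 1"),
    ]

    def stub_model(prefix: str, suffix: str) -> str:
        # Stand-in for a real LLM call: right on the first task, wrong on the second.
        return "return;" if "function" in prefix else "2"

    print(exact_match_accuracy(tasks, stub_model))  # 0.5
```

Exact match is deliberately strict; a real harness might also report a similarity score so that near-misses are distinguishable from unrelated output.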

What doesn’t get benchmarked doesn’t get attention, which means that Solidity is neglected when it comes to large language code models. It may be tempting to look at our results and conclude that LLMs can generate good Solidity. While commercial models just barely outclass local models, the results are extremely close. Unlike even Meta, DeepSeek is truly open-sourcing its models, allowing anyone to use them for commercial purposes. So while it’s exciting and even admirable that DeepSeek is building powerful AI models and offering them to the public entirely for free, it makes you wonder what the company has planned for the future. Synthetic data isn’t a complete solution to finding more training data, but it’s a promising approach. This isn’t a hypothetical issue; we have encountered bugs in AI-generated code during audits. As always, even for human-written code, there is no substitute for rigorous testing, validation, and third-party audits.

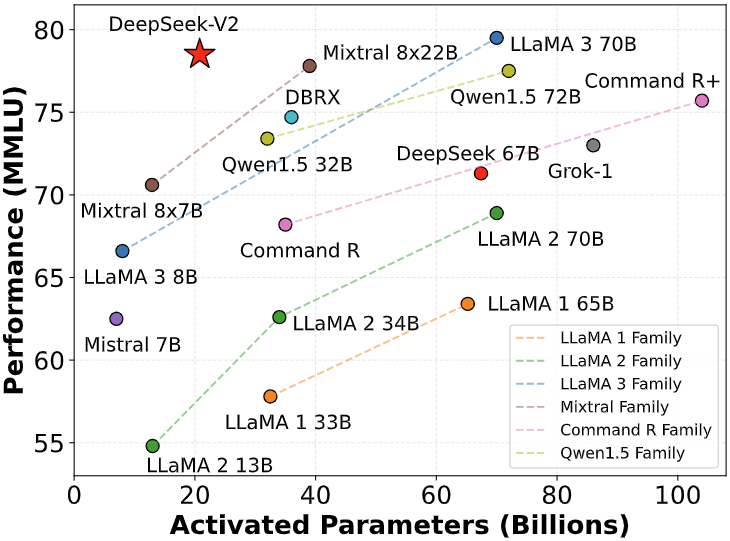

Although CompChomper has only been tested against Solidity code, it is largely language independent and can easily be repurposed to measure completion accuracy in other programming languages. The whole-line completion benchmark measures how accurately a model completes an entire line of code, given the prior line and the next line. The most interesting takeaway from the partial-line completion results is that many local code models are better at this task than the large commercial models.

Figure 4: Full line completion results from popular coding LLMs.

Figure 2: Partial line completion results from popular coding LLMs.

DeepSeek demonstrates that high-quality results can be achieved through software optimization rather than relying solely on expensive hardware resources. The DeepSeek team writes that their work makes it possible to "draw two conclusions: First, distilling more powerful models into smaller ones yields excellent results, whereas smaller models relying on the large-scale RL mentioned in this paper require enormous computational power and may not even achieve the performance of distillation."
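
The post defines whole-line completion only as "given the prior line and the next line." The following Python sketch shows one way such tasks, and partial-line tasks, could be cut from a Solidity file. It assumes a fixed split fraction for partial lines; `whole_line_tasks`, `partial_line_tasks`, and `keep_fraction` are hypothetical names, not CompChomper’s.

```python
from typing import List, Tuple

# (prefix, suffix, expected): a hypothetical task representation
Task = Tuple[str, str, str]


def whole_line_tasks(source: str) -> List[Task]:
    """Hide one line at a time; the model sees the previous and next lines
    and must reproduce the hidden line."""
    lines = source.splitlines()
    tasks: List[Task] = []
    for i in range(1, len(lines) - 1):
        if not lines[i].strip():
            continue  # nothing meaningful to complete on a blank line
        tasks.append((lines[i - 1] + "\n", "\n" + lines[i + 1], lines[i]))
    return tasks


def partial_line_tasks(source: str, keep_fraction: float = 0.5) -> List[Task]:
    """Keep the start of a line (plus the previous line as context) and ask the
    model to finish it; the next line is given as the suffix."""
    lines = source.splitlines()
    tasks: List[Task] = []
    for i in range(len(lines) - 1):
        line = lines[i]
        if len(line.strip()) < 2:
            continue  # too short to split meaningfully
        cut = max(1, int(len(line) * keep_fraction))
        previous = lines[i - 1] + "\n" if i > 0 else ""
        tasks.append((previous + line[:cut], "\n" + lines[i + 1], line[cut:]))
    return tasks


if __name__ == "__main__":
    sol = "pragma solidity ^0.8.0;\ncontract C {\n    uint x;\n}"
    for prefix, suffix, expected in whole_line_tasks(sol):
        print(repr(expected))  # 'contract C {' then '    uint x;'
```

The first and last lines of a file never become whole-line targets here, because each lacks one side of the required context.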

At Trail of Bits, we both audit and write a fair bit of Solidity, and are quick to use any productivity-enhancing tools we can find. Once AI assistants added support for local code models, we immediately wanted to evaluate how well they work. Unfortunately, these tools are often bad at Solidity. This work also required an upstream contribution of Solidity support to tree-sitter-wasm, to benefit other development tools that use tree-sitter. The data security risks of such technology are magnified when the platform is owned by a geopolitical adversary and could represent an intelligence goldmine for a country, experts warn. The algorithm appears to look for a consensus in the knowledge base. The research community is granted access to the open-source versions, DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat. Patterns or constructs that haven’t been created before can’t yet be reliably generated by an LLM. A scenario where you’d use this is when you type the name of a function and would like the LLM to fill in the function body.
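
Filling in a function body after typing its name is the kind of task that fill-in-the-middle (FIM) prompting is commonly used for: the model sees the code before and after the cursor and generates what goes between them. The Python sketch below assumes prefix-suffix-middle ordering. `build_fim_prompt`, `splice`, and the `<PRE>`/`<SUF>`/`<MID>` sentinel strings are hypothetical placeholders, since each FIM-capable model defines its own special tokens in its tokenizer.

```python
def build_fim_prompt(
    prefix: str,
    suffix: str,
    pre_tok: str = "<PRE>",
    suf_tok: str = "<SUF>",
    mid_tok: str = "<MID>",
) -> str:
    """Assemble a prefix-suffix-middle (PSM) fill-in-the-middle prompt.
    The sentinel defaults are placeholders; substitute the special tokens
    documented for the model you actually use."""
    return f"{pre_tok}{prefix}{suf_tok}{suffix}{mid_tok}"


def splice(prefix: str, middle: str, suffix: str) -> str:
    """Insert the model's generated middle back between prefix and suffix."""
    return prefix + middle + suffix


if __name__ == "__main__":
    # The user has typed a function signature; the body is the hole to fill.
    prefix = "function add(uint a, uint b) public pure returns (uint) {\n"
    suffix = "\n}\n"
    print(build_fim_prompt(prefix, suffix))
    # A hard-coded stand-in for the model's output:
    print(splice(prefix, "    return a + b;", suffix))
```

Because the prompt is plain text, pointing the same code at a different model only requires passing that model's sentinel strings.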